目录

2 基于神经网络的转移依存句法分析

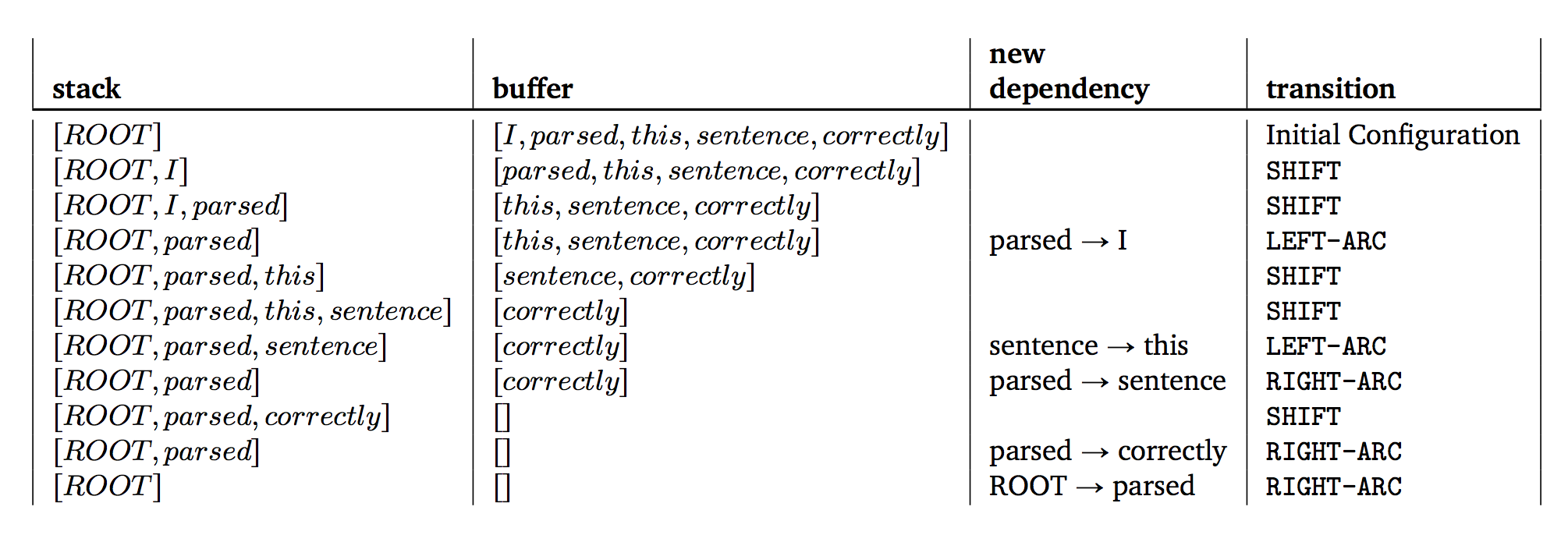

a 手工完成Transition-Based Dependency Parsing

b 复杂度

一个含有$n$个单词的句子会花费多少个步骤?

$2n$,每个单词shift一步,arc一步。

c 初始化 & parse_step

PartialParse初始化:

def __init__(self, sentence): """Initializes this partial parse. Your code should initialize the following fields: self.stack: The current stack represented as a list with the top of the stack as the last element of the list. self.buffer: The current buffer represented as a list with the first item on the buffer as the first item of the list self.dependencies: The list of dependencies produced so far. Represented as a list of tuples where each tuple is of the form (head, dependent). Order for this list doesn't matter. The root token should be represented with the string "ROOT" Args: sentence: The sentence to be parsed as a list of words. Your code should not modify the sentence. """ # The sentence being parsed is kept for bookkeeping purposes. Do not use it in your code. self.sentence = sentence ### YOUR CODE HERE self.stack = ['ROOT'] self.buffer = sentence[:] self.dependencies = [] ### END YOUR CODE

转移动作:

def parse_step(self, transition): """Performs a single parse step by applying the given transition to this partial parse Args: transition: A string that equals "S", "LA", or "RA" representing the shift, left-arc, and right-arc transitions. """ ### YOUR CODE HERE if transition == "S": self.stack.append(self.buffer[0]) self.buffer.pop(0) elif transition == "LA": self.dependencies.append((self.stack[-1], self.stack[-2])) self.stack.pop(-2) else: self.dependencies.append((self.stack[-2], self.stack[-1])) self.stack.pop(-1) ### END YOUR CODE

d Minibatch Parsing

得益于TF强大的矩阵加速能力,你可以将多个句子同时传入。实现如下:

def minibatch_parse(sentences, model, batch_size): """Parses a list of sentences in minibatches using a model. Args: sentences: A list of sentences to be parsed (each sentence is a list of words) model: The model that makes parsing decisions. It is assumed to have a function model.predict(partial_parses) that takes in a list of PartialParses as input and returns a list of transitions predicted for each parse. That is, after calling transitions = model.predict(partial_parses) transitions[i] will be the next transition to apply to partial_parses[i]. batch_size: The number of PartialParses to include in each minibatch Returns: dependencies: A list where each element is the dependencies list for a parsed sentence. Ordering should be the same as in sentences (i.e., dependencies[i] should contain the parse for sentences[i]). """ ### YOUR CODE HERE # refer: https://github.com/zysalice/cs224/blob/master/assignment2/q2_parser_transitions.py partial_parses = [PartialParse(s) for s in sentences] unfinished_parse = partial_parses while len(unfinished_parse) > 0: minibatch = unfinished_parse[0:batch_size] # perform transition and single step parser on the minibatch until it is empty while len(minibatch) > 0: transitions = model.predict(minibatch) for index, action in enumerate(transitions): minibatch[index].parse_step(action) minibatch = [parse for parse in minibatch if len(parse.stack) > 1 or len(parse.buffer) > 0] # move to the next batch unfinished_parse = unfinished_parse[batch_size:] dependencies = [] for n in range(len(sentences)): dependencies.append(partial_parses[n].dependencies) ### END YOUR CODE return dependencies

e Xavier初始化

为了防止神经元之间过度依赖,常用的技巧之一是Xavier Initialization。给定$m \times n$大小的$\boldsymbol{A}$,按照$[-\epsilon, \epsilon]$区间的均匀分布生成每个元素$A_{ij}$:

$$\begin{align}

\epsilon &= \frac{\sqrt{6}}{\sqrt{m + n}}

\end{align}$$

实现如下:

def xavier_weight_init(): """Returns function that creates random tensor. The specified function will take in a shape (tuple or 1-d array) and returns a random tensor of the specified shape drawn from the Xavier initialization distribution. Hint: You might find tf.random_uniform useful. """ def _xavier_initializer(shape, **kwargs): """Defines an initializer for the Xavier distribution. Specifically, the output should be sampled uniformly from [-epsilon, epsilon] where epsilon = sqrt(6) / <sum of the sizes of shape's dimensions> e.g., if shape = (2, 3), epsilon = sqrt(6 / (2 + 3)) This function will be used as a variable initializer. Args: shape: Tuple or 1-d array that species the dimensions of the requested tensor. Returns: out: tf.Tensor of specified shape sampled from the Xavier distribution. """ ### YOUR CODE HERE epsilon = np.sqrt(6 / np.sum(shape)) out = tf.Variable(tf.random_uniform(shape=shape, minval=-epsilon, maxval=epsilon)) ### END YOUR CODE return out # Returns defined initializer function. return _xavier_initializer

f Dropout

随机地将隐藏层节点的激活值以概率$p$设为$0$,然后乘上一个常量 $\gamma$ ,这个过程可以写作:

$$\begin{align}

\boldsymbol{h}_{drop} &= \lambda \boldsymbol{d} \circ \boldsymbol{h}

\end{align}$$

其中,$\boldsymbol{d} \in \{0,1\}^{D_{h}}$ ($D_{h}$ 是隐藏层$\boldsymbol{h}$ 单元数) 是一个遮罩向量,每个元素以概率$p$取0,概率$1-p$取1。$\gamma$的选取要保证激活值的期望不变,即对所有$0 \le i \le D_{h}$都有:

$$\begin{align}

\mathbb{E_{p}}[\boldsymbol{h}_{drop}]_{i} &= \boldsymbol{h}_{i}

\end{align}$$

那么给定$p$,$\gamma$ 到底要取多少呢?

$$\gamma = \frac{1}{p}$$

g Adam Optimizer

标准的SGD更新规则:

$$\begin{align}

\boldsymbol{\theta} \leftarrow \boldsymbol{\theta} - \alpha \nabla_{\boldsymbol{\theta}} J_{minibatch}(\boldsymbol{\theta})

\end{align}$$

Adam用的是更加复杂的更新规则,有两个额外的步骤:

1、动量更新,记录一个历史梯度的均值(速度)$m$:

$$\begin{align}

\boldsymbol{m} &\leftarrow \beta_{1}\boldsymbol{m} + (1 - \beta_{1}) \nabla_{\boldsymbol{\theta}} J_{minibatch}(\boldsymbol{\theta})\\

%

\boldsymbol{\theta} &\leftarrow \boldsymbol{\theta} - \alpha\boldsymbol{m}

\end{align}$$

其中$\beta_{1}$ 是一个 0 到 1 (经常取 0.9)的超参数。由于$\beta_{1}$很接近1,所以每次更新量与前一次大致相同,动量减小了更新量的方差,避免了梯度的剧烈震荡。

2、Adam中的学习率还是自适应的,通过记录历史梯度的长度的平方$v$来得到:

$$\begin{align}

\boldsymbol{m} &\leftarrow \beta_{1}\boldsymbol{m} + (1 - \beta_{1}) \nabla_{\boldsymbol{\theta}} J_{minibatch}(\boldsymbol{\theta})\\

%

\boldsymbol{v} &\leftarrow \beta_{2}\boldsymbol{v} + (1 - \beta_{2})( \nabla_{\boldsymbol{\theta}} J_{minibatch}(\boldsymbol{\theta}) \circ \nabla_{\boldsymbol{\theta}} J_{minibatch}(\boldsymbol{\theta})) \\

%

\boldsymbol{\theta} &\leftarrow \boldsymbol{\theta} - \alpha\boldsymbol{m} / \sqrt{\boldsymbol{v}}

\end{align}$$

其中$\circ$ 和 $/$ 都是 elementwise运算。

在Adam中,那些梯度平均较小的参数的更新量会更大。也就是说在损失函数相对于它们的梯度很小的地方也能快速移动,能够快速逃离高原。

h 实现Neural Dependency Parser

要求在开发集上的UAS大于88,loss小于0.07。

就贴个最主要的方法吧:

def add_prediction_op(self):

"""Adds the 1-hidden-layer NN:

h = Relu(xW + b1)

h_drop = Dropout(h, dropout_rate)

pred = h_dropU + b2

Note that we are not applying a softmax to pred. The softmax will instead be done in

the add_loss_op function, which improves efficiency because we can use

tf.nn.softmax_cross_entropy_with_logits

Use the initializer from q2_initialization.py to initialize W and U (you can initialize b1

and b2 with zeros)

Hint: Here are the dimensions of the various variables you will need to create

W: (n_features*embed_size, hidden_size)

b1: (hidden_size,)

U: (hidden_size, n_classes)

b2: (n_classes)

Hint: Note that tf.nn.dropout takes the keep probability (1 - p_drop) as an argument.

The keep probability should be set to the value of self.dropout_placeholder

Returns:

pred: tf.Tensor of shape (batch_size, n_classes)

"""

x = self.add_embedding()

### YOUR CODE HERE

xavier = xavier_weight_init()

with tf.variable_scope("transformation"):

b1 = tf.Variable(tf.random_uniform([self.config.hidden_size,]))

b2 = tf.Variable(tf.random_uniform([self.config.n_classes]))

W = xavier([self.config.n_features * self.config.embed_size, self.config.hidden_size])

U = xavier([self.config.hidden_size, self.config.n_classes])

z1 = tf.matmul(x,W) + b1

h = tf.nn.relu(z1)

h_drop = tf.nn.dropout(h,self.dropout_placeholder)

pred = tf.matmul(h_drop, U) + b2

### END YOUR CODE

return pred

经典的三层网络吧,加了个dropout。在完整数据集上跑完10个epoch花了10分钟,并没有题目上说的要一个小时。可能是自己从源码编译的TensorFlow激活了更多的优化吧。

输出:

924/924 [==============================] - 44s - train loss: 0.0602 Evaluating on dev set - dev UAS: 88.44 New best dev UAS! Saving model in ./data/weights/parser.weights ================================================================================ TESTING ================================================================================ Restoring the best model weights found on the dev set Final evaluation on test set - test UAS: 88.69 Writing predictions Done!

后来加个L2正则,信手调了调参,在测试集上提高了一点点:

924/924 [==============================] - 49s - train loss: 0.0631 Evaluating on dev set - dev UAS: 88.54 New best dev UAS! Saving model in ./data/weights/parser.weights ================================================================================ TESTING ================================================================================ Restoring the best model weights found on the dev set Final evaluation on test set - test UAS: 88.92 Writing predictions Done!

但开发集loss升去了(废话,损失函数多了一项)。考虑到Dropout其实已经是一种正则化,所以这里L2正则化其实没有什么用。

如同《基于神经网络的高性能依存句法分析器》所述,原论文使用了很多技巧,比如将词性等标签也向量化了,分值当然更高。另外,这里使用的词向量仅有50维,只训练了10个epoch,自然没有论文高。

码农场

码农场

我搞到90.5分了,哈哈哈哈哈。

2g(i)中:(以下来自斯坦福官网给出的Solution):Adam使用动量,可以防止梯度更新过快。一是为了在陷入局部最优的时候梯度不为零, 仍然可以逃离局部最优。二是可以让每一次的梯度估计都更加接近数据集整体的梯度。

我想问下,为什么笔记note里面的困惑度perplexity = 2^J,和作业里面定义的困惑度不一样??

dropout那边,如果p是drop的概率的话,gamma应该等于\frac{1}{1-p}

2.b, 每个词进去再出来,应该是2n步,可以数数上面5个词是10步。

xavier初始化是均匀分布,博文里面应该是笔误写成了正态分布

感谢指正

谢谢博主分享!

每次代码都得参考您的= =~~很感谢!

请问在求偏导的时候如何确定一个参数矩阵是否需要转置?

是在求导完式子之后通过观察求导式的各个参数的形状来确定是否需要添加转置操作嘛?

求导求得好乱。。博主能否推荐一下矩阵求导的资料。。感觉和高数学的知识之间有空白。。

stanford视频里面Richard是对各个元素求导,然后把结果写出矩阵形式。

博主这里可能是直接用的线性时的结论,就是左乘右乘分别有公式的。

关于资料,你可以去http://web.stanford.edu/class/cs224n/syllabus.html

有一个补充阅读是讲神经网络梯度计算的,我还没看不知道情况。

我之前是看的一个网友总结的,= =反正我看完,做出来的结果就不对,还觉得正确的解法不符合定理。

就不推荐了。

不是很明白 3.d 关于反向传播计算复杂度的计算,是dJ/dL, dJ/dI, dJ/dH三个计算复杂度的和么? 对于softmax只在最后做一次来讲,传播 t 次是否应该是前两项乘上 t,而最后一项只算一次呢?

1. 是的

2. 有两种interpretation,见上文补充。

d Minibatch Parsing部分,

代码27行minibatch = [parse for parse in partial_parses if len(parse.stack) > 1 or len(parse.buffer) > 0]

其中partial_parses应为minibatch

感谢指正