目录

![]() 最后一次练习先实现RBM的CD1训练,然后将其作为前馈网络预训练的最底层用于识别USPS手写数字。所有代码开源在:https://github.com/hankcs/coursera-neural-net 。

最后一次练习先实现RBM的CD1训练,然后将其作为前馈网络预训练的最底层用于识别USPS手写数字。所有代码开源在:https://github.com/hankcs/coursera-neural-net 。

编程之前

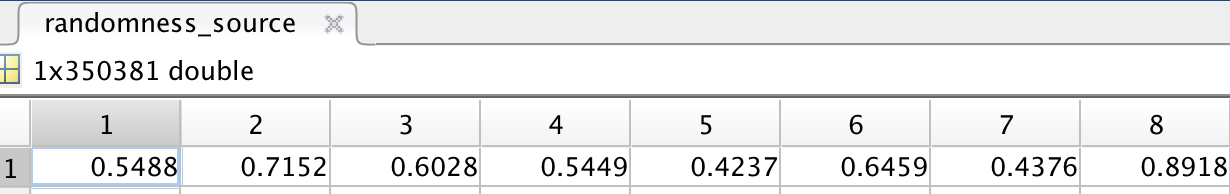

有些约定需要遵守,这次练习的随机数发生器是固定地从给定的a4_randomness_source.mat中读取浮点数:

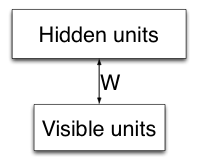

为了简单,所有单元都不使用偏置,RBM就是简单的:

二值单元的取值为0或1,但在矩阵运算中大部分时候储存的都是$P(v_i=1|h)$。

visible_state_to_hidden_probabilities

当有了一组可见单元的二值向量的时候,每个隐藏单元on的概率是多少呢?请完成这个函数:

@staticmethod def visible_state_to_hidden_probabilities(rbm_w, visible_state): """This takes in the (binary) states of the visible units, and returns the activation probabilities of the hidden units conditional on those states. Args: rbm_w (numpy.array) : is a matrix of size <number of hidden units> by <number of visible units> visible_state (numpy.array) : is a binary matrix of size <number of visible units> by <number of configurations that we're handling in parallel>. Returns: (numpy.array) : Activation probabilities of hidden units. size <number of visible units> by <number of configurations that we're handling in parallel>. """ return logistic(np.dot(rbm_w, visible_state))

由于每个隐藏单元都是独立的,所以单独计算每一个就行了。

hidden_state_to_visible_probabilities

有了隐藏单元的二值向量,RBM的连接是对称的,请用类似的手法计算可见单元on的概率。

@staticmethod def hidden_state_to_visible_probabilities(rbm_w, hidden_state): """This takes in the (binary) states of the hidden units, and returns the activation probabilities of the visible units, conditional on those states. Args: rbm_w (numpy.array) : a matrix of size <number of hidden units> by <number of visible units> hidden_state (numpy.array) : is a binary matrix of size <number of hidden units> by <number of configurations that we're handling in parallel>. Returns: (numpy.array) : Activation probabilities of visible units. size <number of visible units> by <number of configurations that we're handling in parallel>. """ return logistic(np.dot(rbm_w.T, hidden_state))

这里只需把rbm_w转置一下就行了。

configuration_goodness

有了所有单元的状态,就可以计算这个configuration的能量,及其相反数——“好感度”了:

@staticmethod def configuration_goodness(rbm_w, visible_state, hidden_state): """Computes negative energy. Args: rbm_w (numpy.array) : a matrix of size <number of hidden units> by <number of visible units> visible_state (numpy.array) : a binary matrix of size <number of visible units> by <number of configurations that we're handling in parallel>. hidden_state (numpy.array) : a binary matrix of size <number of hidden units> by <number of configurations that we're handling in parallel>. Returns: float: the mean over cases of the goodness (negative energy) of the described configurations. """ return np.mean(np.sum(np.dot(rbm_w, visible_state) * hidden_state, 0))

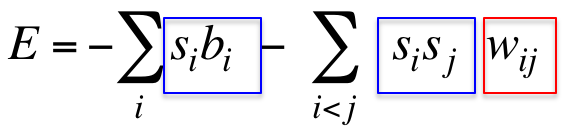

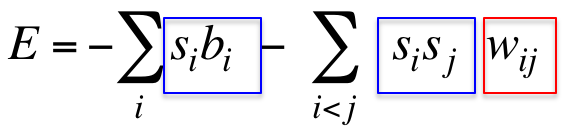

根据能量方程:

在没有偏置的情况下就是第二项了。由于有多个训练实例,所以这里取了平均。

configuration_goodness_gradient

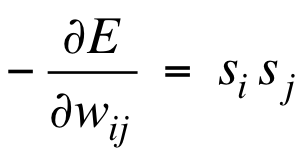

为了优化RBM,得实现梯度计算:

@staticmethod def configuration_goodness_gradient(visible_state, hidden_state): """Computes gradient of negative energy. Notes: * You don't need the model parameters for this computation. Args: visible_state (numpy.array) : is a binary matrix of size <number of visible units> by <number of configurations that we're handling in parallel>. hidden_state (numpy.array) : is a (possibly but not necessarily binary) matrix of size <number of hidden units> by <number of configurations that we're handling in parallel>. Returns: (numpy.array) : gradient of negative energy (same shape as as the model parameters) """ return np.dot(hidden_state, visible_state.T) / np.size(visible_state, 1)

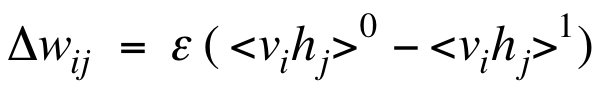

根据公式:

以及两个矩阵都包含多个configurations所以需要平均的事实,得到上述代码。

cd1

也就是Contrastive Divergence gradient estimator with 1 full Gibbs update:根据$p(h|v)$采样h;根据$p(v|h)$采样重建v;根据重建v上的$p(h|v)$采样h;最后根据得到的单元状态估计梯度。梯度计算会用上两次:

-

一旦给了数据和隐藏单元,立即用一次,以得到让数据“好感度”更大的方向,然后顺着这个方向走

-

然后在重建的可见单元和隐藏单元上用一次,以得到让重建的可见单元“好感度”更大的方向,然后背着这个方向走(远离虚构)

采样的时候利用提供的sample_bernoulli函数。

实现如下:

def _cd1(self, visible_data): """Implements single step contrastive divergence (CD-1). Args: visible_data (numpy.array) : is a (possibly but not necessarily binary) matrix of size <number of visible units> by <number of data cases> Returns: (numpy.array) : The returned value is the gradient approximation produced by CD-1. It's of the same shape as <rbm_w>. """ hidden_probs = self.visible_state_to_hidden_probabilities(rbm_w=self.rbm_w, visible_state=visible_data) hidden_states = self.sample_bernoulli(probabilities=hidden_probs) initial = self.configuration_goodness_gradient(visible_state=visible_data, hidden_state=hidden_states) visible_probs = self.hidden_state_to_visible_probabilities(rbm_w=self.rbm_w, hidden_state=hidden_states) visible_states = self.sample_bernoulli(probabilities=visible_probs) hidden_probs = self.visible_state_to_hidden_probabilities(rbm_w=self.rbm_w, visible_state=visible_states) hidden_states = self.sample_bernoulli(probabilities=hidden_probs) reconstruction = self.configuration_goodness_gradient(visible_state=visible_states, hidden_state=hidden_states) self.gradient = initial - reconstruction

最后一句对照公式

直截了当,没什么好说的。

改进CD-1

如果你仔细推敲上述代码,你会发现最后一次对隐藏状态的采样完全是多余的:

hidden_states = self.sample_bernoulli(probabilities=hidden_probs) reconstruction = self.configuration_goodness_gradient(visible_state=visible_states, hidden_state=hidden_states) self.gradient = initial - reconstruction

平均下来,这段代码并没有导致梯度改变,反而增大了梯度的方差。更大的方差意味着需要更小的学习率,也就意味着更慢的训练速度。总之,百害而无一利。改进方法是去掉采样,直接以概率作为“状态”:

def _cd1(self, visible_data): """Implements single step contrastive divergence (CD-1). Args: visible_data (numpy.array) : is a (possibly but not necessarily binary) matrix of size <number of visible units> by <number of data cases> Returns: (numpy.array) : The returned value is the gradient approximation produced by CD-1. It's of the same shape as <rbm_w>. """ hidden_probs = self.visible_state_to_hidden_probabilities(rbm_w=self.rbm_w, visible_state=visible_data) hidden_states = self.sample_bernoulli(probabilities=hidden_probs) initial = self.configuration_goodness_gradient(visible_state=visible_data, hidden_state=hidden_states) visible_probs = self.hidden_state_to_visible_probabilities(rbm_w=self.rbm_w, hidden_state=hidden_states) visible_states = self.sample_bernoulli(probabilities=visible_probs) hidden_probs = self.visible_state_to_hidden_probabilities(rbm_w=self.rbm_w, visible_state=visible_states) # hidden_states = self.sample_bernoulli(probabilities=hidden_probs) # Question 6 reconstruction = self.configuration_goodness_gradient(visible_state=visible_states, hidden_state=hidden_probs) self.gradient = initial - reconstruction

在实值像素强度数据上训练RBM

我们希望像上次练习那样在手写数字图像上训练RBM,但该数据不是二进制的,而是0到1之间的浮点数。解决方法是将该浮点数视作伯努利分布,对每个case都执行一次采样,于是就得到了二进制。于是加一句话:

def _cd1(self, visible_data): """Implements single step contrastive divergence (CD-1). Args: visible_data (numpy.array) : is a (possibly but not necessarily binary) matrix of size <number of visible units> by <number of data cases> Returns: (numpy.array) : The returned value is the gradient approximation produced by CD-1. It's of the same shape as <rbm_w>. """ visible_data = self.sample_bernoulli(probabilities=visible_data) # Question 8 hidden_probs = self.visible_state_to_hidden_probabilities(rbm_w=self.rbm_w, visible_state=visible_data) hidden_states = self.sample_bernoulli(probabilities=hidden_probs) initial = self.configuration_goodness_gradient(visible_state=visible_data, hidden_state=hidden_states) visible_probs = self.hidden_state_to_visible_probabilities(rbm_w=self.rbm_w, hidden_state=hidden_states) visible_states = self.sample_bernoulli(probabilities=visible_probs) hidden_probs = self.visible_state_to_hidden_probabilities(rbm_w=self.rbm_w, visible_state=visible_states) # hidden_states = self.sample_bernoulli(probabilities=hidden_probs) # Question 6 reconstruction = self.configuration_goodness_gradient(visible_state=visible_states, hidden_state=hidden_probs) self.gradient = initial - reconstruction

就行了。

将RBM作为前馈网络的一部分

上次练习中为了防止过拟合实在是下了一番苦功,但这次不必担心了,或者说反而需要让模型多花时间学习输入中的各种规律。反正由于没有监督信息,模型不会过于关注标签。

另外上次练习小心翼翼地挑选了迭代次数、隐藏单元数、正则惩罚因子……这次在无监督学习中完全不必担心,可以放心地用大一点的隐藏单元数,这会提供各种各样的feature detector,以供后面的fine tuning用。

input-to-hidden 权值来自预训练的RBM,hidden-to-class跟上次一样训练。一般情况下input-to-hidden权值也需要训练,但这不是必须的。

训练入口如下:

def a4_main(self, n_hid, lr_rbm, lr_classification, n_iterations, show_rbm_weights=False, train_momentum=0.9):

"""Runs training and computes error and loss of training, testing, and validation training sets.

"""

if np.prod(np.shape(self.data_sets)) != 1:

raise Exception('You must run a4_init before you do anything else.')

nn = self.build_neural_net_model(n_hid=n_hid, lr_rbm=lr_rbm, lr_net=lr_classification,

training_iters=n_iterations, n_classes=10,

train_momentum=train_momentum, n_visible=256, mini_batch_size=100,

show_rbm_weights=show_rbm_weights)

nn.train(self.data_sets['training'])

build_neural_net_model中,先训练RBM:

def build_neural_net_model(self, n_hid, lr_rbm, lr_net, training_iters, n_classes, train_momentum, n_visible, mini_batch_size, show_rbm_weights): """Runs pre-training and returns neural network model. Args: n_hid (int) : number of hidden units lr_rbm (float) : learning rate for RBM model lr_net (float) : learning rate for neural net classifier training_iters (int) : number of training iterations n_classes (int) : number of classes train_momentum (float) : momentum used in training n_visible (int) : number of visible units mini_batch_size (int) : size of training batches show_rbm_weights (bool) : display rbm weights in colour plot with same dimension of rbm_w Returns: FFNeuralNet : instance of feedforward neural network classifier """ rbm = RBM(training_iters=training_iters, lr_rbm=lr_rbm, n_hid=n_hid, n_visible=n_visible, train_momentum=train_momentum, mini_batch_size=mini_batch_size) rbm.train(self.data_sets['training']) if show_rbm_weights: self.show_rbm(rbm.rbm_w) return FFNeuralNet(training_iters=training_iters, rbm_w=rbm.rbm_w, lr_net=lr_net, n_hid=n_hid, n_classes=n_classes, train_momentum=train_momentum, mini_batch_size=mini_batch_size)

rbm的训练中用上了momentum:

def train(self, sequences): """Implements optimize(..) from assignment. This trains a model that's defined by a single matrix of weights. Notes: * This uses mini-batches of size 100, momentum of 0.9, no weight decay, and no early stopping. Args: model_shape (tuple) : is the shape of the array of weights. gradient_function : a function that takes parameters <model> and <data> and returns the gradient (or approximate gradient in the case of CD-1) of the function that we're maximizing. Note the contrast with the loss function that we saw in PA3, which we were minimizing. The returned gradient is an array of the same shape as the provided <model> parameter. Returns: numpy.array : matrix of weights of the trained model """ self.reset_classifier() momentum_speed = np.zeros(self.model_shape) for i, mini_batch_x in enumerate(batches(sequences['inputs'], self.mini_batch_size)): if i >= self.n_iterations: break self.fit(mini_batch_x) momentum_speed = self.train_momentum * momentum_speed + self.gradient self.rbm_w += momentum_speed * self.lr_rbm

这里的fit其实就是对CD1的调用:

def fit(self, X): """Fit a model using one step Contrastive Divergence CD-1. """ self._cd1(visible_data=X) return self

回到nn的训练中来,剩下的训练与上次练习类似,但只训练hid_to_class的参数:

def train(self, sequences): """Implements optimize(..) from assignment. This trains a hid_to_class. Notes: * This uses mini-batches of size 100, momentum of 0.9, no weight decay, and no early stopping. Args: model_shape (tuple) : is the shape of the array of weights. gradient_function : a function that takes parameters <model> and <data> and returns the gradient (or approximate gradient in the case of CD-1) of the function that we're maximizing. Note the contrast with the loss function that we saw in PA3, which we were minimizing. The returned gradient is an array of the same shape as the provided <model> parameter. Returns: (numpy.array) : matrix of weights of the trained model (hid_to_class) """ self.reset_classifier() # calculate the hidden layer representation of the labeled data, rbm_w is input_to_hid hidden_representation = logistic(np.dot(self.rbm_w, sequences['inputs'])) momentum_speed = np.zeros(self.model_shape) for i, (mini_batch_x, mini_batch_y) in enumerate(zip(batches(hidden_representation, self.mini_batch_size), batches(sequences['targets'], self.mini_batch_size))): if i >= self.n_iterations: break self.fit(mini_batch_x, mini_batch_y) momentum_speed = self.train_momentum * momentum_speed + self.d_phi_by_d_input_to_class self.model += momentum_speed * self.lr_net

其中,rbm_w直接作为了input_to_hid的权值。

搜索一些最佳学习率:

# Q8 lr = .005 # all_lr = [lr/4, lr/2, lr/1.5, lr/1.25, lr*1.25, lr*1.5, lr*2, lr*4, lr*6, lr*8, lr*10] all_lr = [lr * 15, lr * 20, lr * 25, lr * 27] for lr_ in all_lr: print "LEARNING RATE: ", lr_ a4.a4_main(300, .02, lr_, 1000, show_rbm_weights=True) print

根据validation data classification cross-entropy loss,最佳大约是:

LEARNING RATE: 0.135 For the test data, the classification cross-entropy loss is 2.09475376588, and the classification error rate (i.e. the misclassification rate) is 0.668777777778 For the training data, the classification cross-entropy loss is 2.09147911773, and the classification error rate (i.e. the misclassification rate) is 0.661 For the validation data, the classification cross-entropy loss is 2.10209070042, and the classification error rate (i.e. the misclassification rate) is 0.694

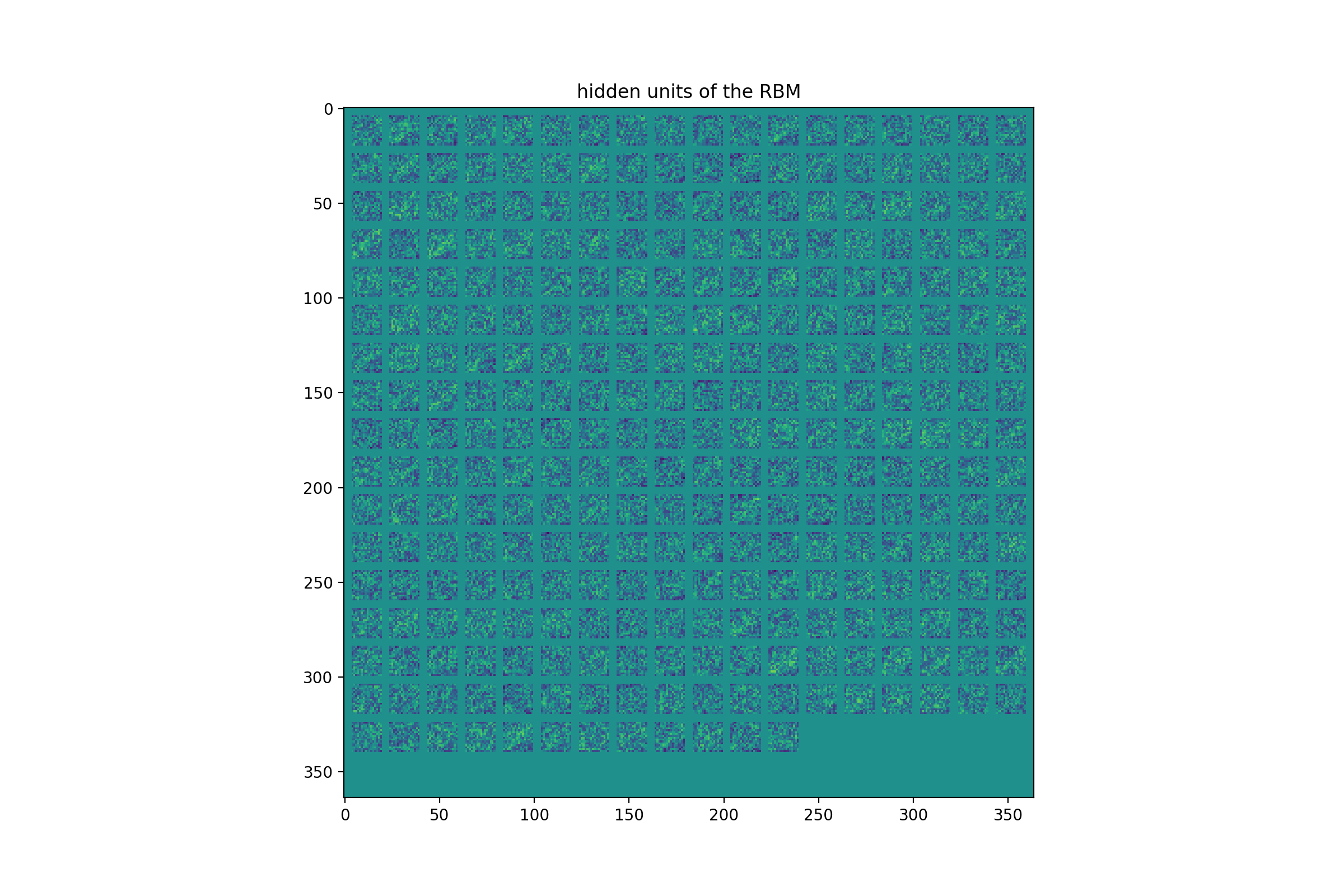

可视化隐藏单元

可视化直接将每个权值作为16*16的像素强度:

def show_rbm(self, rbm_w):

"""Display rbm weights in colour plot with same dimension of rbm_w.

"""

n_hid = np.size(rbm_w, 0)

n_rows = int(np.ceil(np.sqrt(n_hid)))

blank_lines = 4

distance = 16 + blank_lines

to_show = np.zeros([n_rows * distance + blank_lines, n_rows * distance + blank_lines])

for i in xrange(0, n_hid):

row_i = int(i / n_rows) # take floor

col_i = int(i % n_rows)

pixels = np.reshape(rbm_w[i, :], (16, 16)).T

row_base = row_i * distance + blank_lines

col_base = col_i * distance + blank_lines

to_show[row_base:row_base + 16, col_base:col_base + 16] = pixels

extreme = np.max(abs(to_show))

try:

plt.imshow(to_show, vmin=-extreme, vmax=extreme)

plt.title('hidden units of the RBM')

plt.show()

except:

print('Failed to display the RBM. No big deal (you do not need the display to finish the assignment), '

'but you are missing out on an interesting picture.')

raise

似乎隐约有一些轮廓在里面。

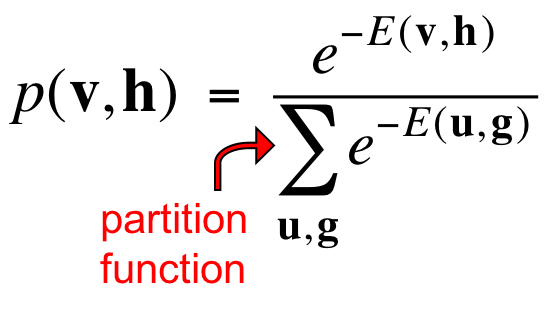

partition function

这是一道附加题,很难,没有给任何提示,是为那些富有挑战精神的人准备的。

对于2个可见单元1个隐藏单元的RBM,一共有8种configuration,partition function的计算不是问题。但对于复杂的RBM,这是个大问题。请实现该函数并计算给定10个隐藏单元256个可见单元的partition function的对数(总共$10+256=266$个单元,也就是$2^{266}$种configuration)。

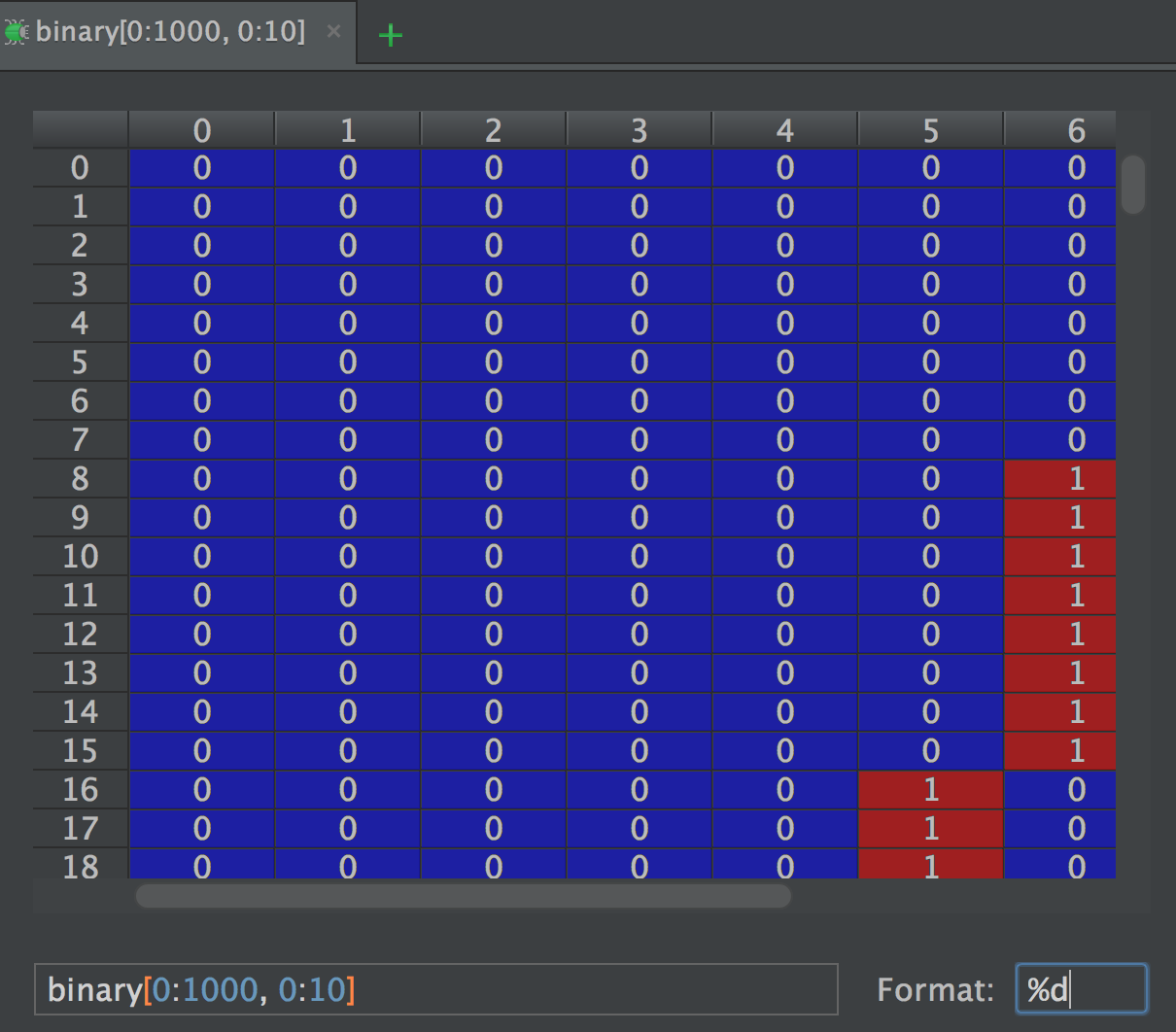

首先生成10个隐藏单元的弧上所有(1024种)组合:

dec_2_bin = lambda x, n_bits: np.array(["{0:b}".format(val).zfill(n_bits) for val in x])

binary = np.array([list(val) for val in dec_2_bin(range(pow(2, np.size(w, 0))), np.size(w, 0))], dtype=float)

但总共不是有$10+256=266$个单元吗?不是应该有$2^{266}$种configuration吗?

其实两者是等效的,就算一共有$2^{266}$种configuration,但对10个隐藏单元来讲,$S_iS_j$的乘积要么是0要么是1,由于i有10个,且RBM是受限制的非稠密连接(只有$h \leftrightarrow v$的连接),所以$S_iS_j$一共只有1024种。

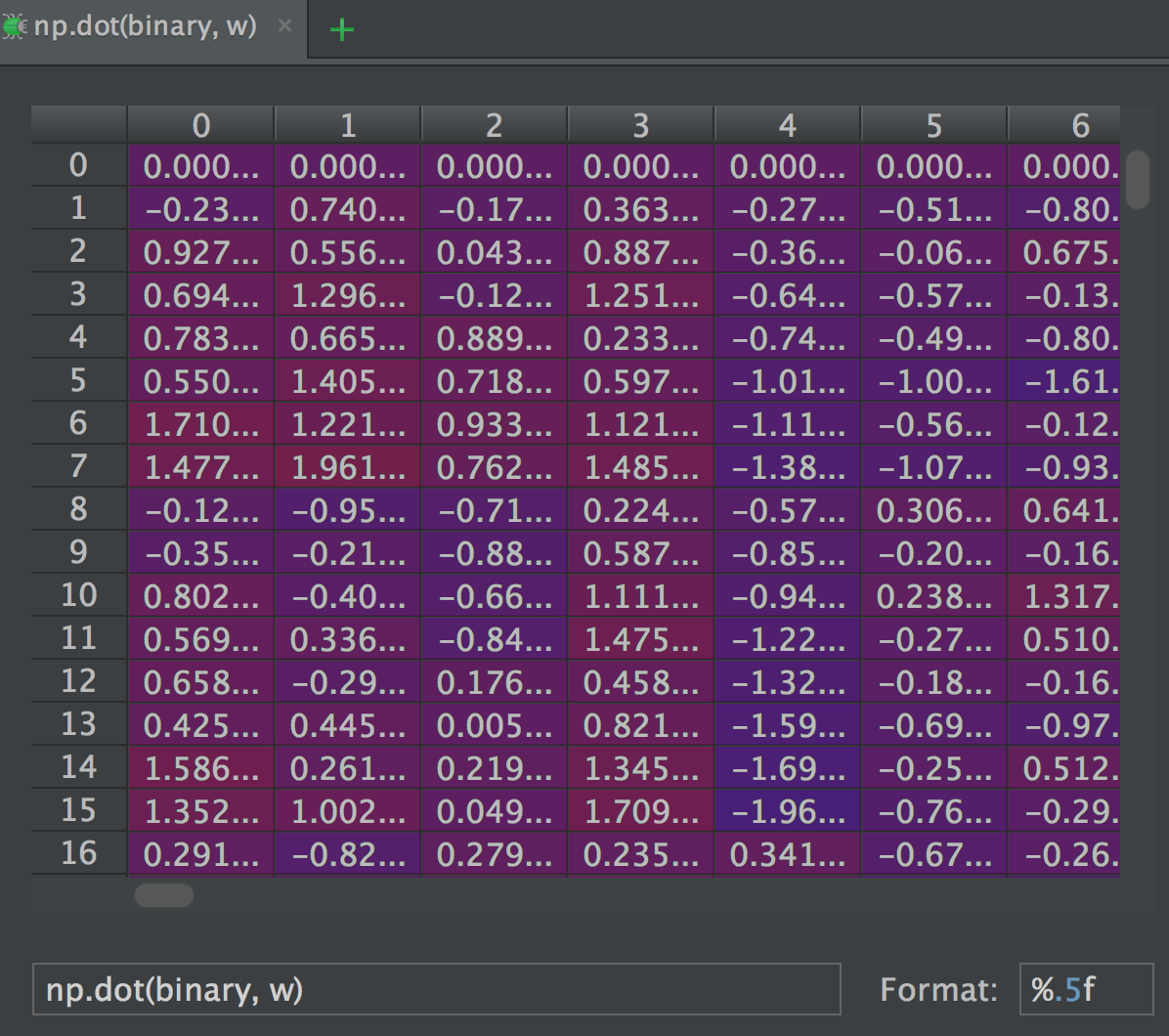

然后根据能量方程:

每个蓝框必须乘以权值:

np.dot(binary, w)

这一步得到(1024, 256)的矩阵:

partition function中指数函数的幂上的求和可以看成乘法:

np.prod((np.exp(np.dot(binary, w)) + 1).T, axis=0)

得到一个1024的行向量,加一是因为接下来要做$\log$运算,而$\log0$是非法的。

此时得到的是1024个蓝框对应的$e^{-E(h,v)}$,将其求和取对数得到最终所求。完整的代码如下:

@staticmethod

def partition_log(w):

"""Computes logarithm (base e) of partition function for given size of rbm (hidden units)

Notes:

* Answer for question 10

Args:

w (numpy.array) : given rbm weight matrix

Returns:

float : log of partition function

"""

dec_2_bin = lambda x, n_bits: np.array(["{0:b}".format(val).zfill(n_bits) for val in x])

binary = np.array([list(val) for val in dec_2_bin(range(pow(2, np.size(w, 0))), np.size(w, 0))], dtype=float)

return np.log(np.sum(np.prod((np.exp(np.dot(binary, w)) + 1).T, axis=0)))

至此结束了Neural Networks for Machine Learning课程的所有编程练习,恭喜通关!

![]() 知识共享署名-非商业性使用-相同方式共享:码农场 » Hinton神经网络公开课编程练习4 Restricted Boltzmann Machines

知识共享署名-非商业性使用-相同方式共享:码农场 » Hinton神经网络公开课编程练习4 Restricted Boltzmann Machines

码农场

码农场

我想通了,哈哈。+1的根本原因在于如果不加1,则只考虑了v节点为1的情况,而加1的话,就考虑到了v节点为0的情况。

感谢指正!你的思路是先固定v,然后考虑h的取值组合。然而v有256个,除了全部为0或全部为1的情况外,还存在部分为0部分为1的情况。如何用你的方法解释呢?

楼主,+1的原因不是因为要做log运算吧?因为即使不加1,exp指数结果最低为1啊,不可能出现log0的现象。这里+1应该有更深层次的原因,求解。